Grow your business with TopRank Online Marketing tips, articles, and expert interviews on social media, digital PR, and search engine marketing. I am a certified Google AdWords Qualified Individual and bring a high level of search engine marketing expertise. I have extensive experience working with Google SEO and AdWords pay-per-click accounts.

Monday, October 4, 2010

How to Write Effective Meta Tags for a Website: Importance of Advance Meta Tags

Tuesday, September 28, 2010

7 Simple SEO Tasks to Complete for a New Site

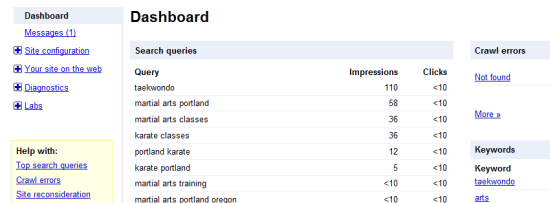

1. Setup Webmaster Tools

Using a service like Google Analytics is great, but you really should verify your site with each search engine's webmaster tools. These services let you diagnose and analyze things on your site that a website analytics solution cannot. You can submit sitemaps, find error pages, diagnose slow-loading pages, and use plenty of other neat tools.

2. Install Analytics, Setup Your Goals

There is no reason why you should not be tracking your website visitors. Google Analytics is free, easy to install and provides a wealth of information about your website and your customers. Sign up for a free account and install, or pay someone to install, the JavaScript code on your website.

Also go the extra mile and track key actions on your website. Things like contact form submissions, downloads, video plays, and purchases should be tracked so you can understand your conversion funnel better and improve your conversion rates.

3. Fix Canonical URL Issues

Something that I would say 90% of all websites never do is fix the www vs. non-www duplicate content issue. This is such a quick fix, but so many websites overlook it. Going with either the www or the non-www version solves the issue of having multiple URLs for the same web page. Also make sure your internal links point to / and not to index.html or similar.

PHP Sites: Add to .htaccess file

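Options +FollowSymlinks

RewriteEngine on

# Send the bare domain to the www host (dots escaped so they match literally)
RewriteCond %{HTTP_HOST} ^domain\.com$ [NC]

RewriteRule ^(.*)$ http://www.domain.com/$1 [L,R=301]

# Redirect only direct requests for index.html, so the server's own directory index lookup does not loop
RewriteCond %{THE_REQUEST} ^[A-Z]{3,9}\ /index\.html\ HTTP/

RewriteRule ^index\.html$ / [R=301,L]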

ASP Sites: Done through IIS
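To check that your internal links already use the preferred form, the same normalization can be sketched in Python. This is only an illustration: the host `www.domain.com` mirrors the placeholder in the rules above, and the list of index file names is an assumption.

```python
from urllib.parse import urlsplit, urlunsplit

CANONICAL_HOST = "www.domain.com"   # placeholder host, as in the rules above
BARE_HOST = "domain.com"            # the non-www form we want to fold in
INDEX_FILES = ("index.html", "index.htm", "index.php")  # assumed default documents


def canonical_url(url: str) -> str:
    """Return the single preferred form of a URL on this site."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    # Treat the bare domain and the www host as the same site.
    if host == BARE_HOST:
        host = CANONICAL_HOST
    path = parts.path or "/"
    # Point /index.html (and similar) at the directory itself.
    for name in INDEX_FILES:
        if path.endswith("/" + name):
            path = path[: -len(name)]
            break
    return urlunsplit((parts.scheme, host, path, parts.query, parts.fragment))
```

Running every internal link through a function like this and comparing the result with the original is a quick way to spot links that would trigger a redirect.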

4. Add Your Robots.txt File

Go through your web files and identify directories that you don’t want the search engines to index, such as your administration files or miscellaneous files found on your server, and restrict them from being indexed.

Upload a robots.txt file with the text below. Make sure you replace “/folder name/” with the folder(s) you want to restrict.

User-agent: *

Disallow: /folder name/
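Before uploading, it can help to confirm that the rules block what you intend. A minimal sketch using Python's standard `urllib.robotparser`; the `/admin/` folder and the `www.domain.com` host are placeholders, not part of the original tip.

```python
from urllib.robotparser import RobotFileParser

# Example rules; /admin/ is a hypothetical folder to restrict.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(path: str, user_agent: str = "*") -> bool:
    """Return True if the rules above let this crawler fetch the path."""
    return parser.can_fetch(user_agent, "http://www.domain.com" + path)
```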

5. Submit XML Sitemap

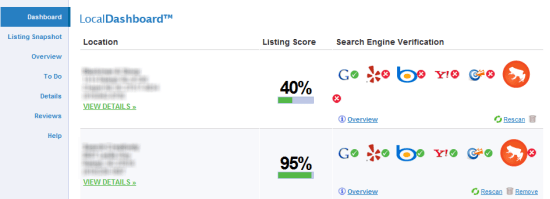
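As a rough illustration of what this step produces, a sitemap is a short XML file listing your URLs. The sketch below builds one with Python's standard library; the URLs are placeholders, and a real generation tool would discover the pages by crawling.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return sitemap XML (as a string) with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


sitemap_xml = build_sitemap([
    "http://www.domain.com/",
    "http://www.domain.com/services/",
])
```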

Once your website is 100% completed, use an XML sitemap generation tool to crawl your entire site and create an XML sitemap that you can submit to the search engines. Each search engine allows you to submit your sitemap right from within Webmaster Tools. This will help get your new site crawled faster and make the search engines better able to find ALL of the pages on your site.

6. Setup Your Local Business Listings

As you may know, Google, Yahoo, and Bing all have local business centers that let you add a free business listing, which has the chance to show up in the local results when a user performs a local query in one of the search engines.

I would suggest going to GetListed to help manage all of your local business listings. This free service helps you check that you are properly submitting and verifying your local business listings in Google, Yahoo, Bing, Yelp, BOTW and Hotfrog.

7. Add 301 Redirects

If you are redesigning a website, try to keep the same URL structure if possible. This way you will reduce the risk of losing any organic search engine rankings and traffic. However, if for whatever reason the URL structure needs to change, identify the key pages that are ranking well and gaining traffic and 301 redirect them to the most relevant page on the new site.

If a client has purchased multiple domains and has mirror sites up, direct those sites over to the main site. There is no point in risking a duplicate content penalty for having multiple sites with the same content.

ASP Sites – 301 Redirect

PHP Sites – 301 Redirect

<?php

// Send a permanent redirect to the new URL, then stop the script
header("HTTP/1.1 301 Moved Permanently");

header("Location: http://www.new-url.com");

exit;

?>
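When many pages move at once, it is easier to keep the old-to-new mapping in one place and generate the redirect rules from it. A minimal sketch, assuming an Apache server (where `Redirect 301` is a standard mod_alias directive); the paths in the mapping are hypothetical.

```python
# Hypothetical old-path -> new-URL mapping for the key pages identified above.
REDIRECTS = {
    "/old-services.html": "http://www.new-url.com/services/",
    "/old-contact.html": "http://www.new-url.com/contact/",
}


def htaccess_redirects(mapping):
    """Return one Apache 'Redirect 301' line per mapped page, sorted by old path."""
    return [f"Redirect 301 {old} {new}" for old, new in sorted(mapping.items())]


lines = htaccess_redirects(REDIRECTS)
```

The generated lines can be pasted into the site's .htaccess file and spot-checked in a browser.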

Read more: http://www.searchenginejournal.com/7-simple-seo-tasks-to-complete-for-a-new-site/24419/#ixzz10q1bYM9G

Monday, September 6, 2010

What’s New With AdWords?

This tool, which is currently in beta, lets you test and measure changes to your keywords, your AdWords bidding, ad groups and placements. Basically you run your existing campaign alongside an experimental campaign.

You choose what percentage of auctions you’d like each campaign to participate in, and then watch what happens. If your experimental campaign is significantly more successful than your original campaign, you can decide to apply the changes to all of your auctions.
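What counts as "significantly more successful" can be made concrete. The sketch below uses a standard two-proportion z-test on click-through rates; it is an assumption for illustration, not a description of how AdWords itself evaluates experiments, and the sample figures are invented.

```python
import math


def two_proportion_z(clicks_a, impressions_a, clicks_b, impressions_b):
    """z-score for the difference in CTR between campaign A (control) and B (experiment)."""
    p_a = clicks_a / impressions_a
    p_b = clicks_b / impressions_b
    # Pooled CTR under the assumption that both campaigns perform the same.
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    return (p_b - p_a) / se


def is_significant(z, threshold=1.96):
    """True when |z| clears the threshold (1.96 is roughly 95% confidence, two-sided)."""
    return abs(z) >= threshold


# Invented example: control 200 clicks / 10,000 impressions, experiment 260 / 10,000.
z = two_proportion_z(200, 10_000, 260, 10_000)
```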

Google has added a new tool to the AdWords Opportunities tab that allows you to see how your campaign performance compares to the average performance of other advertisers. Google measures such indicators as click-through rate, average position, and impressions.

It shows these metrics for each of the different categories that represent your offerings. It can help you identify which aspects of your campaign are inferior to your competition, and then prompt you to improve those aspects accordingly.

Ad Sitelinks let you add additional links to pages within your site in your ads, provided your ads appear at the top of search results. The idea is that more people will click through to your site if you offer them more options. The feature was introduced in November, though this summer Google added a couple of new characteristics.

One new characteristic is that additional links can be condensed into one line of text (previously the only option was two lines). The other change is that advertisers no longer need Google’s approval to set up Ad Sitelinks for their campaigns. You can set up Ad Sitelinks in the Campaign Settings tab.

This new tool lets you see which of your pay-per-click keywords are currently prompting your ads to show, and why the other keywords aren’t spurring ads. You can access it from the More Actions drop-down menu within the Keyword tab.

If you want you can limit your diagnosis to a particular country and/or language. If you are seeing that certain keywords are not resulting in ads because of Quality Score issues, you might decide to resolve those issues. Or you might choose to increase your bids to get your ads shown.

This new AdWords management feature lets you create keywords that are more targeted than broad match and have a greater reach than phrase or exact match. To implement this feature, you put a plus sign (+) in front of one or more words in a broad match keyword. Each word following a (+) sign must appear in the user’s query exactly or as a close variation.

The words that are not preceded by a (+) sign will prompt ads on more significant query variations. This feature will likely drive more traffic for those switching from broad match, and attract more qualified traffic for those switching from phrase or exact match.
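The plus-sign rule can be sketched as a simple check. This is a simplification: Google's definition of a "close variation" is not spelled out here, so the code treats only an exact match or a plain trailing-"s" plural as close, and it does not model how the unmarked words are broad-matched.

```python
def parse_keyword(keyword):
    """Split a keyword into words marked with + (required) and unmarked words."""
    required, flexible = [], []
    for token in keyword.lower().split():
        if token.startswith("+"):
            required.append(token[1:])
        else:
            flexible.append(token)
    return required, flexible


def is_close_variant(word, query_word):
    """Crude stand-in for 'close variation': identical, or differing by a trailing 's'."""
    return query_word in (word, word + "s") or word == query_word + "s"


def modifier_satisfied(keyword, query):
    """True if every +word appears in the query exactly or as a close variant."""
    required, _ = parse_keyword(keyword)
    query_words = query.lower().split()
    return all(
        any(is_close_variant(word, q) for q in query_words) for word in required
    )
```

For example, `+tennis shoes` would pass this check for the query "buy tennis sneakers", while `+tennis +shoes` would not.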

The AdWords Report Center is slowly being phased out as performance reports are moved onto the Campaigns tab. According to Google, it’s best to put performance information on the same page where you manage your campaign.

Reports include campaign reports, ad group reports, and account-level reports. They will specifically be stored in a new part of the Campaigns tab called the Control panel and library.

In June Google unveiled Google Ad News, a website that aggregates advertising news, including news related to AdWords. The site is organized into advertising categories, including search advertising; mobile advertising; and TV, radio and print.

For advertisers and advertising professionals with little time to sift through the categories, a top advertising news category provides Google’s most valued advertising-related articles. Articles come from such publications as The Detroit News, Business Week, and The Guardian.

Sunday, August 15, 2010

The Top 10 Free PPC Tools

- Google AdWords Editor – This is a great time saver for building and setting up huge campaigns.

- Google Website Optimizer – This tool allows you to test and optimize site content and design.

- Google Analytics – Provides much of what an expensive analytics package can for free.

- Google Insights for Search – A great keyword tool for determining seasonality and trends.

- Google Mobile Ad Preview Tool – Offers a quick way to see your ads on mobile devices.

- Other Google tools include: Google Traffic Estimator, Google Alerts, Google Trends, Google Ad Planner, Google URL Builder and, most recently, the competitive tools available through the AdWords dashboard.

Monday, August 2, 2010

Five Steps to an Effective Pay Per Click Keyword Database

A keyword database is a completely different approach to researching and managing PPC keywords. Compared to a keyword list, it’s:

• Easier to organize and manage

• Easier to update and expand

• More actionable

• Collaborative

Building a keyword database isn’t difficult, and as your campaigns scale, you’ll find it much faster and easier to keep things running smoothly. Here’s the five-step process to build an effective keyword database.

Step 1: Start your PPC keyword research

The most important part of a keyword database, naturally, is keywords! To build a comprehensive, up-to-date database, it’s important to look at keyword research as an ongoing process, aggregating keywords from multiple sources.

Here are four sources, both public and private, that will help you gain a complete picture of the terms you should be using in your campaigns:

• Historical site logs: Your server logs are a great source of keyword data—they contain a record of the real search queries that have led people to your site.

• Web analytics: The keyword reports in your analytics provide a continuous stream of new keywords. Incorporate those new insights into your research.

• Search query reports: The search query reports in AdWords Editor are another source of real data. These tell you the actual search queries that have triggered your PPC ads.

Step 2: Segment and organize your keywords

Better keyword research gets you a lot closer to more profitable PPC campaigns, but to reap the full benefits of your research, it’s crucial to organize your keywords into small, manageable groups of closely related terms. This process will improve your campaigns by enabling:

• Better ads: Similarly, you can quickly write relevant, compelling text ads for well-structured keyword groups (aka ad groups).

• Better click-through rates: More relevant pages and ads grab a more qualified audience, so your CTRs and conversion rates improve.

• Better Quality Scores: High CTR and relevance lead to high Quality Scores, so you pay less for better positioning.

Step 3: Cut out waste with negative keywords

With strong keyword research, you can identify profitable keyword opportunities. But for high ROI, it’s equally important to identify and eliminate waste. This means discovering negative keywords, or irrelevant terms that eat up pay-per-click advertising budgets without generating quality leads.

Here are a few ways to find negative keyword candidates:

• During regular keyword research: When looking for relevant keywords, also keep your eyes open for suggestions that aren’t relevant to your business.

• Search query reports: Regularly look through your search query reports in AdWords and remove irrelevant keywords from your ad groups.

• Organic log files: By using your own log files for negative keyword discovery, you can catch irrelevant keywords before they trigger your ads.
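Screening a search query report or log file for negative keyword candidates can be partly automated. A minimal sketch, assuming you already have a list of terms you consider irrelevant; the queries and terms below are invented for illustration.

```python
def split_queries(queries, negative_terms):
    """Separate queries into those to keep and those containing a negative term."""
    negatives = {term.lower() for term in negative_terms}
    kept, flagged = [], []
    for query in queries:
        words = set(query.lower().split())
        # A query is flagged if any of its words is on the negative list.
        (flagged if words & negatives else kept).append(query)
    return kept, flagged


kept, flagged = split_queries(
    ["fresh roses delivery", "artificial roses", "free rose clipart"],
    ["artificial", "free", "clipart"],
)
```

The flagged queries are only candidates; review them by hand before adding the terms as negatives.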

Step 4: Write text ads for each ad group

The next step is to write text ads for each keyword/ad group. If you followed the above process, your ad groups are already highly targeted, so it should be simple to write strong, targeted ads. Here are some tips for writing effective PPC ad copy:

• Don’t overgeneralize: address a specific segment of your audience.

• Test several ads for each ad group. Google will rotate the ads so you can see which works best.

• Always include a call to action.

Step 5: Repeat as necessary to maintain gains

One of the benefits of a keyword database is the ability to expand your research without losing control. So keep monitoring, testing, and tweaking your campaigns to improve results. And keep adding keywords from your analytics! The keywords your clients use to find you are among your most valuable marketing assets.

Tuesday, July 27, 2010

Pay Per Click Advertising and PPC Management Tips

Here are some Pay Per Click advertising tips that will help you get started:

1. Establish a Budget – This may seem simple and obvious but creating a budget and sticking to it is the best way to keep from overspending and falling victim to bidding wars for popular search terms.

2. Develop a PPC Marketing Plan – Creating a plan in the early stages gives you a template to follow which should include thorough research of your industry, your competition, and a prioritized list of your targeted keywords and phrases.

3. Consider your Target Audience – Spend some time assessing who you want to click on your ad, the search terms they are likely to use, and how broad or narrow your intended demographic may be. Determining your audience in the early stages will help to inform your decisions in every phase of the campaign.

4. Identify Niche Opportunities – Instead of focusing on the most popular search terms where costs and competition will be steep, think about specific two- and three-word phrases that may have been overlooked by your competitors.

5. Write Compelling Ad Copy – You have a finite amount of space, so choose every word carefully to entice your audience to click on your ad. Most web users know the difference between sponsored and organic search results but if your ad copy leads them to believe that the link will help them find what they’re looking for, your click-through rates and sales will take off.

6. Craft Custom Landing Pages – This is essential. Each ad must take the viewer to a landing page where they can quickly and easily find what they need. Always remember that web users scan content and the decision to stay or look elsewhere is made in seconds.

7. Create and Analyze Performance Reports – These reports will tell you which ads are underperforming and which are exceeding expectations. Use this data to fine tune and adjust your marketing plan. Track your results at regular intervals.

Monday, July 12, 2010

PPC Tips for the Small Business Owner

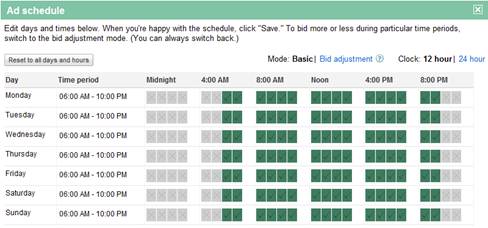

Time/Day Parting – Google allows advertisers to determine the day of week or even the time of day they want their ads to display. This can be a very useful tool for small business advertisers who know when their customers are most likely to look for their product or service. By setting your ads to display at only high performance times you increase the effectiveness of your campaign and reduce your costs.

Match Types – Gone are the days of simply adding keywords that are all set to broad match and hoping for a return. Sure, casting a larger net brings in more traffic, but it also punishes your budget. The quality of that traffic might also suffer depending on the chosen keywords. Try using the available match types, especially exact match, to control costs and which searches are actually displaying your ads.

Negative Keywords – Selecting negative keywords to offset unwanted traffic is an absolute must for any campaign, large or small. For example, a florist that sells only fresh flowers and plants might want to include the negative “artificial” to prevent traffic from artificial flower type keywords. Using negatives also allows you to pursue higher volume keywords without the exposure to unrelated searches.

Geo Targeting – According to a 2009 TMP/comScore report, 80% of consumers expect businesses in their search results to be within 15 miles of their location. This definitely doesn’t apply to all industries and regions, but it should provide a starting point with your targeting. It’s important to mention that with Google’s targeting granularity, you could potentially target your business out of traffic if it’s set so small that only a few people will see the ads.

Other Search Engines – Google has the lion’s share of the search market, but it also carries the highest bid prices. Bing and the like might not offer the volume that Google does; however, smaller search engines often have lower bid prices, which ultimately produce lower conversion costs. Test out a small budget on these other engines to start looking at your PPC efforts as a portfolio.

Keyword Development – The Google traffic estimator tool might show you that the keyword “new shoes” generates 1,500,000 searches each month, but that doesn’t mean it’s a quality keyword for your ad group. To maximize your budget, think locally and specifically. For example, if you’re in the printing business, expand past the generic and more expensive “printing” keywords; pair them up with location identifiers to better define your product and audience. By using the keyword “printing shops Denver 80202,” you pay less for a consumer that is further along in the buying cycle.
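Pairing base terms with location identifiers is easy to script. A minimal sketch; the terms and locations below simply reuse the printing example, and the function name is made up for illustration.

```python
from itertools import product


def local_keywords(base_terms, locations):
    """Combine every base term with every location identifier."""
    return [f"{term} {place}" for term, place in product(base_terms, locations)]


keywords = local_keywords(["printing shops"], ["Denver", "80202"])
```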

Thursday, June 17, 2010

Tips for Google Site and Category Exclusion Tool

Targeting Google AdWords contextual advertising campaigns just got easier. This new tool keeps your ads from appearing in some pretty dodgy places online.

Google launched an important new tool that prevents your ads from showing on poorly-performing sites: the Category Exclusion tool.

Remember why content campaigns drain ad dollars? You waste budget because contextual ads appear on sites that are "poor quality," meaning visitors to those sites are not likely to convert, even if your ads garner clicks.

We've discussed several strategies for controlling which sites carry your ads. The Category Exclusion tool simplifies the job by allowing advertisers to exclude whole swaths of site types.

To find the tool, click on Tools under Campaign Management. First you're allowed to choose a campaign. Though you can choose search campaigns, you shouldn't -- the tool really only acts on keyword-targeted and placement-targeted content campaigns.

Having chosen a campaign, you'll see the familiar site exclusion text field where you can type or paste the specific domain names of sites that shouldn't carry your ads. But you'll see two additional tabs: Topics and Page Types. Let's start with Topics. Here's an example of what you'll see:

Here's Google's explanation for each of the topics that can be excluded:

Conflict and tragedy

- Crime, police, and emergency: Police blotters, news stories on fires, and emergency services resources

- Death and tragedy: Obituaries, bereavement services, accounts of natural disasters, and accidents

- Military and international conflict: News about war, terrorism, and sensitive international relations

Edgy content

- Juvenile, gross, and bizarre content: Jokes, weird pictures, and videos of stunts

- Profanity and rough language: Moderate use of profane language

- Sexually suggestive content: Provocative pictures and text

But Google doesn't trust its intuition, or yours, to lead to an intelligent decision about which site topics to exclude. The tool shows you, based on your campaign's history, exactly what you're risking by excluding sites within each topic.

In the example above, the advertiser would probably be wise to exclude sites in the crime topic, since the CTR has been a dismal 0.52%, with no conversions. But the advertiser might think twice before excluding sites in the juvenile topic, since despite the poor CTR, clicks from those sites are converting at a respectable 7.41%. Notice that cost-per-conversion data is also available, so advertisers can make sure their decisions are likely to result in acceptable ROI.
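The three figures the tool reports are simple ratios. A minimal sketch of how they relate; the impression, click and cost numbers are invented, chosen only so the rates match the 0.52% and 7.41% quoted above.

```python
def placement_metrics(impressions, clicks, conversions, cost):
    """Compute CTR, conversion rate and cost per conversion for one topic."""
    ctr = clicks / impressions if impressions else 0.0
    conversion_rate = conversions / clicks if clicks else 0.0
    # Cost per conversion is undefined when nothing converted.
    cost_per_conversion = cost / conversions if conversions else None
    return {
        "ctr": ctr,
        "conversion_rate": conversion_rate,
        "cost_per_conversion": cost_per_conversion,
    }


crime = placement_metrics(impressions=10_000, clicks=52, conversions=0, cost=26.00)
juvenile = placement_metrics(impressions=9_000, clicks=27, conversions=2, cost=13.50)
```

A topic with spend but no cost-per-conversion value (like the first one here) is the clearest candidate for exclusion.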

Let's turn now to the Page Types tab:

Here the advertiser is presented with a range of page types that have traditionally yielded poor results for some advertisers. Again, Google's explanation of each type:

Network types

- Parked domains are sites in Google's AdSense for domains network. Users are brought to parked domain sites when they enter the URL of an undeveloped Web page into a browser's address bar. There, they'll see ads relevant to the terminology in the URL they entered. The AdSense for domains network is encompassed by both the content network and the search network. If you exclude this page type, you'll exclude all parked domain sites, including the ones on the search network.

- Error pages are part of Google's AdSense for errors network. Certain users are brought to error pages when they enter a search query or unregistered URL in a browser's address bar. There, they'll see ads relevant to the search query or URL they entered.

User-Generated Content

- Forums are Web sites devoted to open discussion of a topic.

- Social networks are Web sites offering an interactive network of friends with personal profiles.

- Image-sharing pages allow users to upload and view images.

- Video-sharing pages allow users to view uploaded videos.

This data is fascinating because it illustrates something I've been hearing from Google for some time: it's not uncommon for pages/sites like parked domains (arbitrageurs) and error pages to yield good-to-excellent CTRs and conversion rates. In the example above, only social network pages yielded poor results, while the others produced results that rivaled the best search campaigns.

So, use the tool to further fine-tune your content campaigns -- but watch out for these caveats:

- Not all languages are supported -- at the moment only Dutch, English, French, German, Italian, Portuguese, and Spanish sites can be excluded

- The tool is not infallible -- so continue to run Placement performance reports and use the Site Exclusion tool to opt out of poorly-performing sites/pages.

Wednesday, June 16, 2010

5 Ways to Kill Your Search Rankings & Their Solutions

One of the biggest fears for web site owners that have long relied on search traffic for new business is a sudden drop in search engine rankings. Some webmasters are experiencing this very situation as a result of Google’s recent Mayday update (Matt Cutts video).

In most cases, it takes a lot for a tenured web site to mess up its search visibility. In other situations, it doesn’t take much at all. Mistakes that result in exclusion, penalties and, more often, confusion for search engines are easy to overlook. Don’t let carelessness or ignorance undo the search visibility achieved from years of content and online marketing. Avoid these common mistakes:

1. Website Redesign

Probably one of the most common situations that results in fluctuations in search visibility involves significant changes to a web site’s design, content, and internal linking relationships, or the new use of Flash, Ajax or JavaScript for navigation. Search engines copy websites and the links between pages. Think of it as taking a picture of your site. If you change your site from what the search engine has a copy of, the new form might not include the same content, keywords and crawlable links.

The worst case scenario is when a company decides to redesign the website and overwrites all previous SEO work. Upon finding that search visibility has completely tanked, they call up the SEO agency and demand an explanation.

Solution: When significant changes are planned for the company website, work with your SEO to identify how the new design will impact search visibility. Have them map out and prioritize the implications of page layout, content and keyword usage, navigation, links and redirects.

2. New Content Management System (CMS)

Along the same lines as a new website design, changing content management systems can create a lot of confusion for search engines. Many companies have had websites long enough that the legacy CMS used to launch the site no longer serves the needs of the organization. Large companies may find that the hodgepodge of CMSs used by different business units and acquired companies is inefficient and that a common content management system would better serve the organization.

A change in the CMS means a change in the templates that format web pages, navigation and oftentimes the URL structure of pages. It’s common that major changes in content are rolled out along with new website software and that can spell confusion for search engines. URLs that change can also create confusion. For example, web page file names that previously ended with .asp and now end with .aspx are perceived as completely different.

Solution: While the IT department or web developer will understand the importance of redirecting old URLs to their new counterparts, execution in a search engine friendly manner is another thing entirely. Choosing 301 rather than 302 redirects and mapping URLs that have no logical counterpart in the new system are essential. Identifying the top sources of inbound link traffic to pages and conducting an outreach program to get other sites to change the URLs they use to link to your site is a specialty area for link building SEOs more so than IT. Simply put, make sure you have an SEO migration plan.
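Part of a migration plan is a rule for where each legacy URL should go. A minimal sketch, assuming the only change is the .asp to .aspx extension mentioned above; the function name, the set of new paths and the fallback page are hypothetical.

```python
def map_legacy_url(old_path, known_new_paths, fallback="/"):
    """Return (status, target) for a legacy URL, always as a permanent 301 redirect."""
    # Swap the legacy extension for the new one when it applies.
    candidate = old_path[:-4] + ".aspx" if old_path.endswith(".asp") else old_path
    # If the new system has no matching page, send visitors to the chosen fallback.
    target = candidate if candidate in known_new_paths else fallback
    return 301, target


new_paths = {"/products.aspx", "/contact.aspx"}
```

In practice the fallback should be the most relevant surviving page rather than always the home page.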

3. Loss of Inbound Links

In the SEO game content is King and links are the Queen. Or content is the Yin and links are the Yang. Whatever the metaphor, links are an essential mechanism for search engines to discover pages and a signal for ranking them. Companies that proactively acquire links organically, or that earn vs. buy the links, don’t have much of a problem in this area. The longer other websites link to your site, the better. But some sites may go offline temporarily or permanently. A blog may decide to remove its blogroll or a site may simply decide to remove links to your site. If you change your CMS as noted above, other sites that don’t know this will continue to link to your old URL format (.asp vs .aspx) and that will appear as a loss of links. If you buy links from other sites and they are detected by search engines, those links may be stripped of any PageRank value. There are many reasons for link loss.

Solution: Active content creation, promotion and social participation are essential for building a significant and relevant inbound link footprint on the web. Those links will drive traffic and serve as a signal to search engines for ranking your content in the search results. The key is to monitor your link footprint on an ongoing basis using link building tools that will identify major fluctuations in inbound link counts. Then you can drill down to see where the link loss has occurred and see if you can do something about it. The best defense is offense, so make sure you have an active link acquisition program in place so that minor to moderate fluctuations in links will have little, if any, effect.

4. Duplicate Content

Serving up duplicate content using different URLs confuses search engines. This can happen when sites use queries on a database to display lists of products in a category that can be reached multiple ways. Printer friendly versions of pages, other English language versions of pages or outright copying content from one website to another can all cause duplicate content issues. When a search engine is presented with multiple versions of the same content, it must decide which is the original or canonical version, since engines do not want to show the exact same thing to users in the search results. Anything your website does to make that process confusing or inefficient can result in poor search performance for your web site.

Solution: A professional SEO working with website content managers can help manage broader duplicate content issues for a company website and any micro sites they’re publishing. With press releases, RSS feeds or articles that are syndicated, it’s a best practice to make sure the original is published on your site first, then to have any duplicates clearly link back to the original. Ongoing monitoring can also help with unintentional duplicate content issues caused by other sites scraping your site’s content.
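Ongoing monitoring for exact duplicates can start with something as simple as fingerprinting page text. A minimal sketch that groups URLs whose text is identical after whitespace and case are normalized; it will not catch near-duplicates, and the sample pages are invented.

```python
import hashlib
import re
from collections import defaultdict


def content_fingerprint(text):
    """Hash the text after collapsing whitespace and lowercasing."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def find_duplicates(pages):
    """Given {url: text}, return sorted groups of URLs that share the same content."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[content_fingerprint(text)].append(url)
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


duplicates = find_duplicates({
    "/shoes?sort=price": "Red  Shoes\nfor sale",
    "/shoes/print": "red shoes for sale",
    "/about": "About us",
})
```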

5. You’ve Become a SEO Spammer!

As more content is published and promoted online, more websites are launched and more competition comes into the market, companies will be tempted to achieve the coveted first page listing at any cost. Many companies that succumb to this temptation do so because of seeing their competition get away with tactics that are clearly more aggressive and manipulative than search engines allow. Webmasters might see suggestions in forums (often disinformation) or get advice from others doing well in the disposable site, content monetization game.

Engaging in simple things like hidden keywords, redirecting pages to present one version to search engine bots and another to site visitors or publishing numerous copies of the exact same web site using search/replace keyword optimization can all result in negative effects. There are far more aggressive tactics considered spam than that of course, but SEO spam isn’t an area we work with and I’m not interested in promoting unsustainable, high risk tactics.

Solution: Understand the webmaster guidelines from each search engine: Google, Yahoo, Bing. Don’t violate those policies with the site(s) that are your bread and butter. If you must test, do so with other websites that are not going to affect your business. Rather than focusing on loopholes and exploits, be a better marketer and understand what your target audience wants, what influentials respond to and develop smarter, more creative marketing that can stand on its own to drive traffic and sales. Include SEO in those “UnGoogled efforts” and you’ll realize the added benefit of great performance from your website in search engines as well.

Friday, June 11, 2010

Introducing The All-Important Quality Score

Throughout this column, the phrase “Quality Score” has popped up on different occasions. Understanding Quality Score is fundamental for successful paid search campaigns, but can be a difficult concept for beginners to understand. So the time has come to cover this topic so you can use Quality Score to your advantage and not fall into its traps.

The early days before Quality Score

In the beginning of paid search, auctions existed in a purely capitalistic marketplace where the advertiser who was willing to pay the most for a keyword was awarded first place, the second highest-paying advertiser’s ads were second, and so forth.

It was an easy system to understand and it made sense. However, the ad space became a bit muddled. Advertisers with deep pockets literally took over the search engine results pages on keywords that weren’t necessarily related to their business. For example, a global soft drink company could start to take over terms such as rock and roll, skateboards, or even Britney Spears. These advertisers mostly weren’t trying to cause chaos—they were just trying to reach their target demographic. But in doing so, the sponsored search results for some keywords could potentially contain no results directly related to what a searcher was looking for.

The engines realized that this could be a problem. Google has long maintained that the best thing for its business is to keep results as relevant as possible—including paid listings. To improve the relevance of paid search ads, Google created the Quality Score. Microsoft and Yahoo soon followed with their own systems to improve relevance of ads.

So what is Quality Score?

Simply put, Quality Score is a numeric grade from one to ten (ten being best) assigned to each of your keyword/ad/landing page combinations. The score is updated frequently: it is recalculated each time your keyword is searched, to account for changes to your terms and creatives. So what’s the value of a high Quality Score? If your keyword is deemed highly relevant, Google will lower your cost-per-click and rank your ad higher than competitors’ ads, even if they’re bidding more for those terms. On the flip side, a keyword with a low Quality Score may mean you have to bid a premium price to even appear on a search results page.
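To make the ranking effect described above concrete, here is a minimal Python sketch. It assumes a simplified model in which ads are ordered by bid multiplied by Quality Score; the real formulas are not public in full, and the advertisers, bids, and scores below are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Ad:
    advertiser: str      # hypothetical advertiser name
    max_cpc: float       # maximum cost-per-click bid, in dollars
    quality_score: int   # 1 (worst) to 10 (best)


def rank_by_bid(ads):
    """Pre-Quality-Score ordering: the highest bid takes first place."""
    return sorted(ads, key=lambda ad: ad.max_cpc, reverse=True)


def rank_by_bid_and_quality(ads):
    """Simplified ordering (an assumption): bid multiplied by Quality Score."""
    return sorted(ads, key=lambda ad: ad.max_cpc * ad.quality_score, reverse=True)


# Invented example data: a big spender with an irrelevant ad
# against two smaller, more relevant advertisers.
ads = [
    Ad("soft_drink_giant", max_cpc=3.00, quality_score=2),
    Ad("skate_shop", max_cpc=1.00, quality_score=9),
    Ad("board_reviews", max_cpc=1.50, quality_score=5),
]

bid_only = [ad.advertiser for ad in rank_by_bid(ads)]
with_quality = [ad.advertiser for ad in rank_by_bid_and_quality(ads)]

print(bid_only)      # ['soft_drink_giant', 'board_reviews', 'skate_shop']
print(with_quality)  # ['skate_shop', 'board_reviews', 'soft_drink_giant']
```

Under these assumptions, the advertiser with the lowest bid takes first place once relevance is factored in, while the highest bidder drops to last.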

How is Quality Score calculated?

We don’t know exactly what goes into the Quality Score black box, but we do know that high click-through rates (CTRs) are a big piece of the pie. The engines probably treat a high number of clicks as “virtual votes” cast by past users for the advertiser, and figure that a good CTR means a relevant keyword.

Google does offer some insight into how Quality Score is calculated in the AdWords help area:

While we continue to refine our Quality Score formulas for Google and the Search Network, the core components remain more or less the same:

- The historical clickthrough rate (CTR) of the keyword and the matched ad on Google; note that CTR on the Google Network only ever impacts Quality Score on the Google Network — not on Google

- Your account history, which is measured by the CTR of all the ads and keywords in your account

- The historical CTR of the display URLs in the ad group

- The quality of your landing page

- The relevance of the keyword to the ads in its ad group

- The relevance of the keyword and the matched ad to the search query

- Your account’s performance in the geographical region where the ad will be shown

- Other relevance factors

Note that there are slight variations to the Quality Score formula when it affects ad position and first page bid:

- For calculating a keyword-targeted ad’s position, landing page quality is not a factor. Also, when calculating ad position on a Search Network placement, Quality Score considers the CTR on that particular placement in addition to CTR on Google.

- For calculating first page bid, Quality Score doesn’t consider the matched ad or search query, since this estimate appears as a metric in your account and doesn’t vary per search query.

What do you really need to know about Quality Score?

You’ll have plenty of time to test various tactics to improve Quality Score once the campaign starts, so don’t waste too much time worrying about it right now. The one thing you do need to do, though, is keep your ad groups tightly focused. Because click-through rate is the most heavily weighted factor in Quality Score, you’ll want to make sure that your ads are highly relevant to the keywords in each group so that you get the best CTR possible. In my experience, as long as you’re getting a good percentage of clicks to impressions, the engines will consider your terms relevant enough to earn fairly high scores. After that, there are just a few things to keep in mind, such as ensuring that your landing pages load quickly and that some of your ad group’s keywords appear in your ad text. Do those things right and your Quality Score should be just fine.
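The "percentage of clicks to impressions" mentioned above is simply clicks divided by impressions. Here is a small Python sketch that computes it per ad group and flags groups worth tightening. The ad group names, the numbers, and the 2% cutoff are all made up for illustration; no engine publishes such a threshold.

```python
def click_through_rate(clicks, impressions):
    """Return CTR as a fraction; 0.0 when there are no impressions yet."""
    if impressions == 0:
        return 0.0
    return clicks / impressions


# Hypothetical ad group stats: name -> (clicks, impressions)
ad_groups = {
    "running shoes": (120, 2000),
    "shoes": (45, 9000),
    "trail running shoes": (30, 400),
}

# Arbitrary review cutoff chosen for this example, not an engine rule.
CTR_THRESHOLD = 0.02

needs_review = sorted(
    name
    for name, (clicks, impressions) in ad_groups.items()
    if click_through_rate(clicks, impressions) < CTR_THRESHOLD
)

print(needs_review)  # ['shoes']
```

In this invented data, the broad "shoes" group is the one dragging CTR down, which is the kind of loosely focused ad group worth splitting up.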

Overall, my best advice is to not obsess over Quality Score. There are a couple hundred other factors to PPC success that are just as important. The important thing now is to just make sure you understand the concept fully and do some extra research if you feel you still need some clarification.

The premise that ad popularity and a high Quality Score lead to improved search advertising results may or may not be true, especially for B2B advertisers. I urge business marketers to challenge this assumption and understand the relationship between PPC Quality Score and ROI-based results.

Quality Score algorithms

In simple terms, ad position is primarily determined by how much an advertiser is willing to pay for each click (i.e. your bid) and the popularity, or click-through rate (CTR), associated with your ad. Relevancy also plays a role, and very poor landing pages can lead to penalties.

More information can be found in the documentation for Google Quality Score and Yahoo Quality Index. Details aside, Quality Score causes most advertisers to try to maximize response, or click-through rate.

Note: Not surprisingly, this methodology also increases the click charges that go into the pockets of search networks!
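A rough way to see why rewarding CTR also benefits the search networks: what an engine earns from an ad slot depends on both the price per click and how often the ad is clicked. The Python sketch below assumes the simple relationship revenue = cost-per-click × CTR × impressions; the two ads and their numbers are hypothetical.

```python
def revenue_per_thousand_impressions(cpc, ctr):
    """Engine revenue for 1,000 impressions, given cost-per-click and CTR."""
    return cpc * ctr * 1000


# Hypothetical ads: a high bidder that is rarely clicked,
# and a lower bidder with a much more popular ad.
high_bid_low_ctr = revenue_per_thousand_impressions(cpc=3.00, ctr=0.005)
low_bid_high_ctr = revenue_per_thousand_impressions(cpc=1.00, ctr=0.04)

print(f"High bid, low CTR: ${high_bid_low_ctr:.2f} per 1,000 impressions")
print(f"Low bid, high CTR: ${low_bid_high_ctr:.2f} per 1,000 impressions")
```

With these made-up figures, the cheaper but more popular ad brings in roughly $40 per thousand impressions against roughly $15 for the expensive, rarely clicked one.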

Tips on PPC targeting

All advertisers should deploy these PPC best practices to accurately target their audience:

- Select very specific keywords and long tail keyword phrases

- Utilize keyword match-types

- Deploy negative keywords

- Test geo-targeted ads (even for national brands)

- Implement day-parting
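The targeting tips above can be sketched in Python as a simple eligibility check. This is a deliberately simplified model, not how any engine actually implements matching: real broad match also covers variants and related terms, negatives here are single words only, and the function names and schedule are hypothetical.

```python
from datetime import time


def matches(keyword, query, match_type):
    """Simplified match types: exact, phrase (in order), broad (any order)."""
    kw = keyword.lower().split()
    q = query.lower().split()
    if match_type == "exact":
        return q == kw
    if match_type == "phrase":
        n = len(kw)
        return any(q[i:i + n] == kw for i in range(len(q) - n + 1))
    if match_type == "broad":
        return all(word in q for word in kw)
    raise ValueError(f"unknown match type: {match_type}")


def is_eligible(keyword, match_type, query, negatives, now, start, end):
    """Apply negative keywords, then day-parting, then the match type."""
    query_words = set(query.lower().split())
    if query_words & {word.lower() for word in negatives}:
        return False  # blocked by a negative keyword
    if not (start <= now < end):
        return False  # outside the scheduled hours (day-parting)
    return matches(keyword, query, match_type)


# Hypothetical schedule: show ads only during business hours.
OPEN, CLOSE = time(9, 0), time(17, 0)

print(is_eligible("running shoes", "phrase", "buy running shoes online",
                  ["free"], time(10, 30), OPEN, CLOSE))
print(is_eligible("running shoes", "broad", "free running shoes",
                  ["free"], time(10, 30), OPEN, CLOSE))
```

The first query passes all three checks, while the second is blocked by the negative keyword "free" before the match type is even considered.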

This week’s question: “What have you heard about Quality Score?”